Recently we shipped a preview of React Router with support for React Server Components (RSC) as well as low-level APIs for RSC support in Data Mode. With the release of React Router v7.9.2, we’re excited to announce that preview support for RSC is now also available in Framework Mode.

To get started, you can quickly scaffold a new app from our unstable RSC Framework Mode template:

```sh
npx create-react-router@latest --template remix-run/react-router-templates/unstable_rsc-framework-mode
```

The React Router team has been developing RSC support for Framework Mode for some time. Here’s a demo:

Mark continues:

To enable RSC Framework Mode, you simply swap [the React Router Vite plugin] out for the new `unstable_reactRouterRSC` Vite plugin, along with the official (experimental) `@vitejs/plugin-rsc` as a peer dependency.

```sh
npm install @vitejs/plugin-rsc
```

```ts
import { defineConfig } from "vite";
import { unstable_reactRouterRSC } from "@react-router/dev/vite";
import rsc from "@vitejs/plugin-rsc";

export default defineConfig({
  plugins: [
    // Replaces the stable React Router Vite plugin
    unstable_reactRouterRSC(),
    // Official (experimental) RSC plugin for Vite, installed above
    rsc(),
  ],
});
```

The build output changes from a React Router `ServerBuild` to a request handler function with the signature `(request: Request) => Promise<Response>`.
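Because the build output is now just a Fetch API handler, it can be wrapped or mounted like any other `(request: Request) => Promise<Response>` function. The sketch below assumes Node 18+ (global `Request`, `Response`, `Headers`, `performance`); `appHandler` and `withServerTiming` are hypothetical names standing in for the real build output and for whatever middleware you might add, not React Router APIs.

```typescript
// The handler contract described above: plain Fetch API in, Fetch API out.
type RequestHandler = (request: Request) => Promise<Response>;

// Hypothetical stand-in for the real build output, used only so the example runs.
const appHandler: RequestHandler = async (request) => {
  const { pathname } = new URL(request.url);
  return new Response(`Hello from ${pathname}`, {
    headers: { "content-type": "text/plain" },
  });
};

// Hypothetical middleware: since the contract is standard Fetch API types,
// wrapping the handler needs no framework-specific glue.
function withServerTiming(handler: RequestHandler): RequestHandler {
  return async (request) => {
    const start = performance.now();
    const response = await handler(request);
    // Copy headers so we never mutate a possibly immutable Headers object.
    const headers = new Headers(response.headers);
    headers.set("Server-Timing", `app;dur=${(performance.now() - start).toFixed(1)}`);
    return new Response(response.body, {
      status: response.status,
      statusText: response.statusText,
      headers,
    });
  };
}

const handler = withServerTiming(appHandler);
```

Calling `handler(new Request("http://localhost/hello"))` resolves to a 200 response whose body is `Hello from /hello` and which carries a `Server-Timing` header. Any server that speaks `Request`/`Response` could host a handler of this shape.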

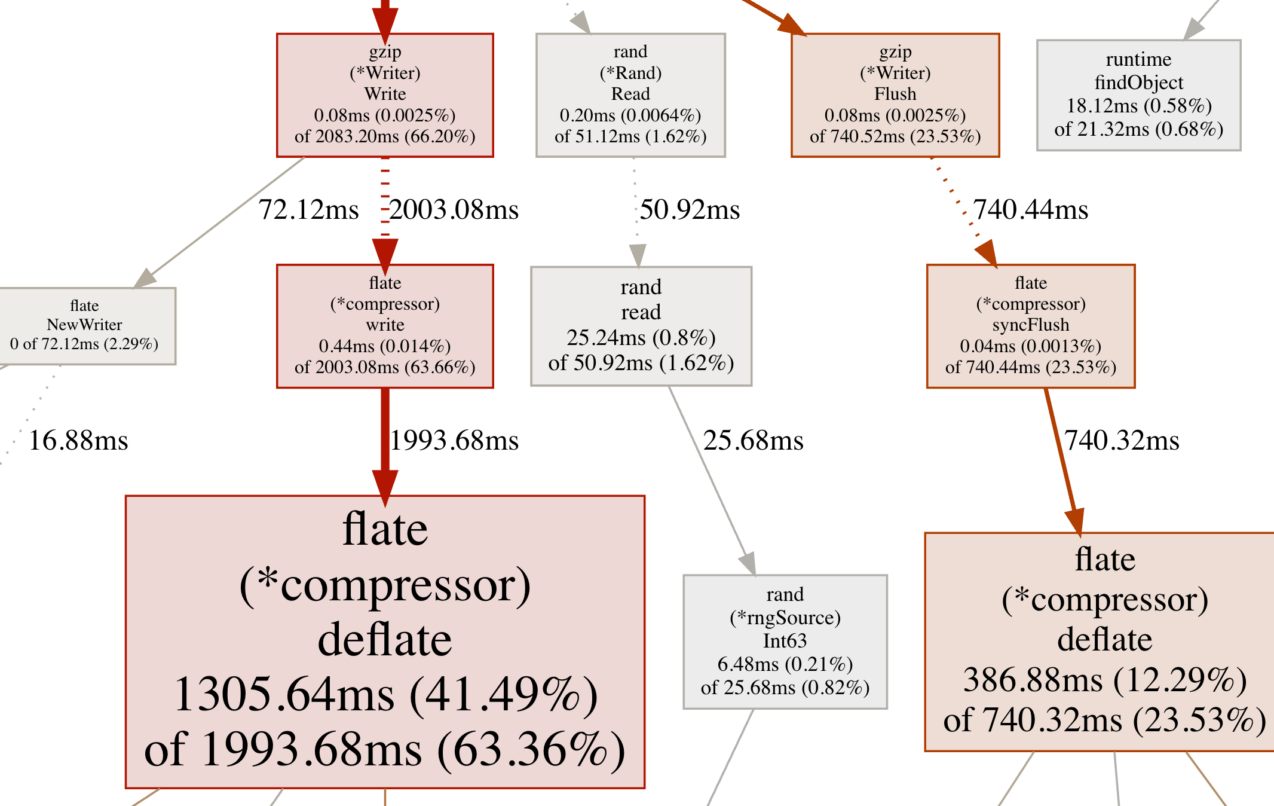

The heavy lifting was moved into RSC Data Mode, making the React Router RSC Vite plugin much smaller than the stable Vite plugin:

As noted in our post on “React Router and React Server Components: The Path Forward”, the great thing about RSC is that this new Framework Mode plugin is much simpler than our earlier non-RSC work. Most of the framework-level complexity is now implemented at a lower level in RSC Data Mode, with RSC Framework Mode being a more lightweight layer on top.
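To make the layering idea concrete, here is a deliberately tiny, hypothetical model of the split: a lower "Data Mode" layer that owns matching and response creation, and a thin "Framework Mode" layer that only translates file-based route modules into routes and delegates everything else. None of these names (`createDataModeHandler`, `createFrameworkHandler`, `DataRoute`, `RouteModule`) are React Router APIs, and real concerns such as nested matching, streaming and RSC payloads are omitted.

```typescript
// Hypothetical toy model; not React Router's implementation.
type Handler = (request: Request) => Promise<Response>;

// Lower layer ("Data Mode" stand-in): owns matching and response creation.
interface DataRoute {
  path: string;
  loader: (request: Request) => Promise<unknown>;
}

function createDataModeHandler(routes: DataRoute[]): Handler {
  return async (request) => {
    const { pathname } = new URL(request.url);
    const route = routes.find((r) => r.path === pathname);
    if (!route) return new Response("Not Found", { status: 404 });
    const data = await route.loader(request);
    return new Response(JSON.stringify(data), {
      headers: { "content-type": "application/json" },
    });
  };
}

// Upper layer ("Framework Mode" stand-in): only maps file-based route
// modules to Data Mode routes, then delegates to the lower layer.
interface RouteModule {
  loader?: (args: { request: Request }) => Promise<unknown>;
}

function createFrameworkHandler(files: Record<string, RouteModule>): Handler {
  const routes: DataRoute[] = Object.entries(files).map(([file, mod]) => ({
    // "routes/about.tsx" -> "/about", "routes/_index.tsx" -> "/"
    path:
      "/" +
      file.replace(/^routes\//, "").replace(/\.tsx$/, "").replace(/^_index$/, ""),
    loader: (request) =>
      mod.loader ? mod.loader({ request }) : Promise.resolve(null),
  }));
  return createDataModeHandler(routes);
}
```

In this toy, the framework layer is a few lines of route-manifest plumbing because everything else lives one level down, which mirrors the shape of the design described above.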